I loathe putting my thesis first (the thesis-first tradition is directly descended from people who didn’t actually believe that persuasion is possible), but here I will. The way that a lot of liberals, progressives, and pro-democracy people are talking about GOP support for authoritarianism is neither helpful nor accurate. Both the narrative about how we got here and the policy agenda for what we should do now are grounded in assumptions about rhetoric that are wrong. And they’re narratives and assumptions that come from the Enlightenment.

I rather like the Enlightenment—an unpopular position, even among people who, I think, are direct descendants of it. But, I’ll admit that it has several bad seeds. One is a weirdly Aristotelian approach of valuing deductive reasoning.

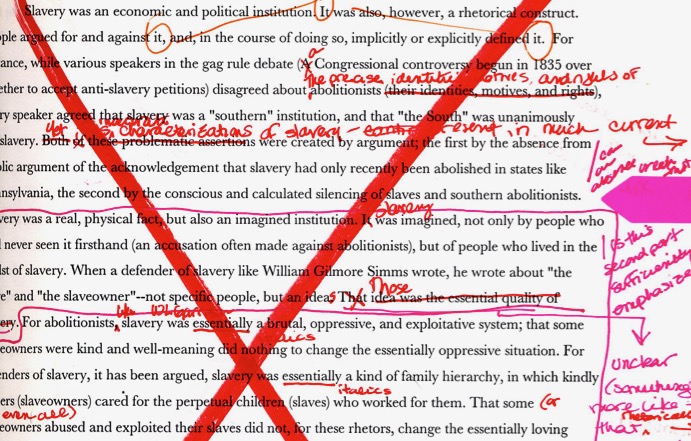

In an early version of this post, I wrote a long explanation about how weird it is that Enlightenment philosophers all rejected Aristotle but they actually ended up reasoning like he did—collecting data in service of finding universally valid premises. I deleted it. It wouldn’t have made my argument any clearer or more effective. I too am a child of the Enlightenment. I want to go back to sources.

Here’s what matters: syllogistic (deductive) reasoning starts with a universally valid premise and then makes a claim about a specific case. “All men are mortal, and Socrates is a man, so Socrates must be mortal.” Inductive reasoning starts with the specific cases (“Socrates died; so did Aristotle; so did Plato”) in order to make a more general claim (“therefore, all Greek philosophers died”). For reasons too complicated to explain, Aristotle was associated with the first, although he was actually very interested in the second.

Enlightenment philosophers, despite claiming to reject Aristotle, had a tendency to declare something to be true (“All men are created equal”) and then reason, very selectively, from that premise. (It only applied to some men.) That tendency to want to reason from universally valid principles turned out to be something that was both liberating and authoritarian. Another bad seed was the premise that all problems, no matter how complicated, have a policy solution. There are two parts to this premise: first, that all problems can be solved, and second, that there is one solution. The Enlightenment valued free speech and reasonable deliberation (something I like about it), but in service of finding that one solution, and that’s a problem.[1]

The assumption was that enlightened people would throw off the blinders created by “superstition” and see the truth. So, like the authorities against whom they were arguing, they assumed that there was a truth. For many Enlightenment philosophers, the premise was that free and reasonable speech among reasonable people would enable them to find that one solution. The unhappy consequence was to try to gatekeep who participated in that speech, and to condemn everyone who disagreed—this move still happens in public discourse. People who agree with Us see the Truth, but people who don’t are “biased.”

The Enlightenment assumed a universality of human experience (that all people are basically the same), an assumption that directly led to the abolition of slavery, the extension of voting rights, and public education. It also led to a vexed understanding of what deliberative bodies were supposed to do: 1) find the right answer, or 2) find a good enough policy. It’s interesting that the Federalist Papers waver between those two ways of thinking about deliberation.

The first is inherently authoritarian, since it assumes that people who have the wrong answer are stupid, have bad motives, are dupes, and should therefore be dismissed, shouted down, expelled. This way of thinking about politics leads to a cycle of purification (both Danton and Robespierre ended up guillotined).[2] I’m open to persuasion on this issue, but, as far as I know, any community that begins with the premise that there is a correct answer, and it’s obvious to good people, ends up in a cycle of purification. I’d love to hear about any counter-examples.

The second is one that makes some children of the Enlightenment stabby. It seems to them to mean that we are watering down an obviously good policy (the one that looks good to them) in order to appease people who are wrong. What’s weird about a lot of self-identified leftists is that we claim to value difference while actually denying that it should be valued when it comes to policy disagreements.

We’re still children of Enlightenment philosophers who assumed that there is a right policy, and that anyone who disagrees with us is a benighted fool.

Another weird aspect of Enlightenment philosophers was that they accepted a very old model of communication—the notion that if you tell people the truth they will comprehend it (unless they’re bad people). This is the transmission model of communication. Enlightenment philosophers, bless their pointed little heads, often seemed to assume that enlightening others simply involved getting the message right. (I think JQA’s rhetoric lectures are a great example of that model.)

I think that what people who support democracy, fairness, compassion, and accountability are now facing is a situation that has been brewing since the 1990s—a media committed to demonizing democracy, fairness, compassion, and in-group accountability. It’s a media that has inoculated its audience against any criticism of the GOP.

And far too many people are responding in an Enlightenment fashion—that the problem is that the Democratic Party didn’t get its rhetoric right. As though, had the Democratic Party transmitted the right message, people who reject on sight anything even remotely critical of the GOP would have chosen to vote Dem. Ted Cruz won reelection because he had ads about transgender kids playing girls’ sports. That wasn’t about rhetoric, but about policy.

We aren’t here because Harris didn’t get her rhetoric right. Republicans have a majority of state legislatures and governorships. This isn’t about Harris or the Dem party; this is about Republican voters. To imagine that Harris’ or the Dems’ rhetoric is to blame is to scapegoat. Blame Republican voters.

We are in a complicated time without a simple solution. Here is the complicated solution: Republicans have to reject what Trump is doing.

I think that people who oppose Trump and what he’s doing need to brainstorm ways to get Republican voters to reject their pro-Trump media and their kowtowing representatives.

I think that is a strategy necessary for our getting this train to stop wrecking, and I think it’s complicated and probably involves a lot of different strategies. And I think we shouldn’t define that strategy by deductive reasoning—I think this is a time when inductive reasoning is our friend. If there is a strategy that will work now, it’s worked in the past. So, what’s likely to work?

[1] The British Enlightenment didn’t make the rational/irrational split in the same way that the Cartesian tradition did. For the British philosophers, there wasn’t a binary between logic and feelings; for them, sentiments enhance reasonable deliberation, but the passions inhibit it.

[2] There’s some research out there that suggests that failure causes people to want to purify the in-group. My crank theory is that it depends on the extent to which people are pluralist.