[Another paper from the Rhetoric Society of America conference. For the conference, the paper is titled “The ‘War on Christians’ and Preventive War.”]

This panel came about because of our shared interest in the paradox that advocates of reactionary ideologies often use a rhetoric of return in service of radically new policies and practices. Sometimes they’re claiming to return to older practices that either never existed or that are not the same as what is now being advocated, and sometimes they’re claiming that their new policies are a continuation of current practice when they aren’t. It’s not a paradox that reactionary pundits and politicians would use appeals to the past in order to argue for a reactionary agenda—in fact, pundits and politicians all over the political spectrum use a mythical past to argue for policies, and, if anything, it makes more sense for reactionaries to do it than progressives—the tension comes from appealing to a false past as though it were all the proof one needs to justify unprecedented policies.

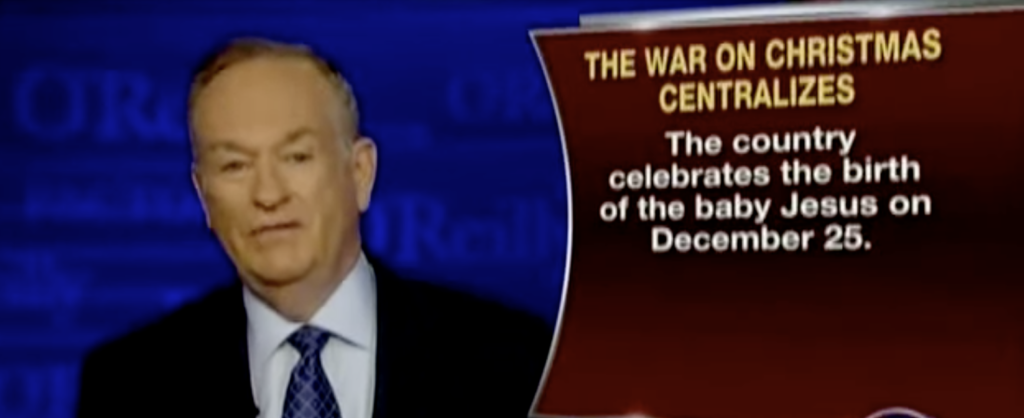

The false past is somewhat puzzling in various ways. It’s sometimes about apparently trivial points, such as the myth that everyone used to say “Merry Christmas!” It frequently appeals to a strange sense of timelessness, in which words like “Christian” or “white” have always had exactly the same meaning that they do now. It’s sometimes self-serving to the point of silliness, as in the plaint that “kids these days” are worlds worse than any previous generation. The evidence for these claims is often nothing more than hazy nostalgia for the simple world of one’s youth, so that the fact that as children we were unaware of crime and adultery is taken as proof that they didn’t happen in those days.

At first, when I started running across this odd strategy, I thought the rhetoric of return was essentially a kind of rhetorical diversionary tactic, born of necessity. People are naturally resistant to new policies, especially people likely to be attracted to reactionary ideologies, and engaging in reasonable policy argumentation is hard, especially if you don’t have a very good policy. People rarely demand that a policy be defended through argumentation if it’s the status quo, or a return to past successful policy, and that kind of makes sense. What that audience tendency means is that a rhetor who wants to evade the responsibilities and accountability of policy argumentation can try to frame their new policy as a return to a previously successful one or a continuation of the status quo. This is nostalgia as a diversion from deliberation and argumentative accountability.

But I now think that’s only part of it.

I think it’s a rhetorical strategy oriented toward radicalizing an audience in order to persuade them to engage in a preventive and absolute war, thereby granting in-group rhetors complete moral and rhetorical license. I’m arguing that there is a political strategy with four parts. Reactionary rhetors strategically falsify the past and/or present such that some practice (e.g., celebrating Christmas as we do now) is narrated as something all Americans have always done, and therefore as constituting America. Another strategy is to insist that “liberals” are at war with “America,” as evidenced by their determination to exterminate those mythically foundational practices (such as celebrating Christmas). Because liberals are trying to exterminate America, the GOP should respond with preventive and absolute war—normal political disagreement is renarrated as a zero-sum war in which one or the other group must be exterminated. The goal of those three strategies is to gain the moral and rhetorical license afforded by persuading a base that they are existentially threatened.

I. Strategic Nostalgia

Take, for instance, abortion. The GOP is not proposing returning to the world pre-Roe v. Wade; they are advocating a radically new set of policies, much more extreme than were in place in 1972. In 1972, thirteen states allowed abortion “if the pregnant woman’s life or physical or mental health were endangered, if the fetus would be born with a severe physical or mental defect, or if the pregnancy had resulted from rape or incest” (Guttmacher). Abortion was outright legal in four states. And while it was a hardship, it was at least possible for women to travel to those states and get a legal abortion.

GOP state legislatures are not only criminalizing abortion in all circumstances, even if forcing a woman to continue with a nonviable pregnancy is likely to kill her, but also criminalizing miscarriage and criminalizing (or setting bounties for) getting medical treatment (or certain forms of birth control) anywhere, even where it’s legal. And it’s clear that a GOP Congress will pass a federal law prohibiting abortion under all circumstances, as well as many forms of birth control, in all states. They are not proposing a return.

Or, take another example. In 2003, the Bush Administration proposed a radically new approach in international relations—at least for the post-war US—preventive war. But, as exemplified in Colin Powell’s highly influential speech to the UN (Oddo), this new approach was presented as another instance of preemptive war (the basis of Cold War policy).

II. Preventive War

To explain that point, I need to talk about kinds of war. When rhetors are advocating war, they generally claim it’s one of four kinds: self-defense, preemptive, preventive, and conquest. Self-defense, when another nation has already declared war and is invading, is a war of necessity. The other three are all wars of choice, albeit with different degrees of choice. A preemptive war is when one nation is about to be attacked and so strikes first—it’s preemptive self-defense against imminent aggression. A preventive war “is a strategy designed to forestall an adverse shift in the balance of power and driven by better-now-than-later logic” (Levy 1). Preventive war is about preserving hegemony, in both senses of that word.

Nations or groups engage in preventive war when they believe that their current geopolitical, economic, or ideological hegemony is threatened by an up-and-coming power. And I would note that white evangelicals started pushing a rhetoric of war when their political hegemony in the South was threatened by desegregation and internal migration (Jones); the GOP increasingly appealed to various wars as data came out showing that its base was not far from national minority status (FiveThirtyEight).

While wars of conquest are common, and the US has engaged in a lot, it’s rare to find major political figures willing to admit that they were or are advocating a war of conquest. The only example I’ve found is Alexander the Great at the river Beas, and our only source for that speech was written two hundred years later, so who knows what he said. Even Hitler claimed (and perhaps believed) that his war of conquest was self-defense. Wars of conquest—ones in which the goal is to exterminate or completely disempower another group simply because they have things we want or they’re in our way—are rhetorically a bit of a challenge. So, pundits and politicians advocating wars of conquest avoid the challenge. They claim it’s not a war of choice, but one forced on us by a villainous enemy, and thus either self-defense or preemptive.

Wars of conquest are generally what the military theorist Carl von Clausewitz called “absolute” war; that is, one in which we are trying to “destroy the adversary, to eliminate his existence as a State” (qtd. in Howard 17). Absolute war is not necessarily genocide, but it is oriented toward making the opponent defenseless (77), so that they must do our will. Most wars, according to Clausewitz, can end far short of absolute war because there are other goals, such as gaining territory, access to a resource, and so on, which he calls political ends.

What I am arguing is that the US reactionary right is using strategic nostalgia to mobilize its base to support and engage in an absolute war against “liberals” (that is, any opposition party or dissenters), by claiming “liberals” have already declared such a war on America. Thus, it’s preventive war, but defended by a rhetoric of self-defense.

As Rush Limbaugh said, “And what we are in the middle of now, folks, is a Cold Civil War. It has begun” (“There is no”) and “I think we are facing a World War II-like circumstance in the sense that, as then, it is today: Western Civilization is at stake” (“The World War II”; see also “There is No Whistleblower”). And it is the Democrats who started the war (“What Happened”), actually, a lot of wars, including a race war. Again, quoting Limbaugh, “I believe the Democrat Party, Joe Biden, Kamala Harris, whoever, I think they are attempting, and have been for a while, to literally foment a race war. I think that has been the objective” (“Trump’s Running”).

If “conservatives” are at war with “liberals,” then what kind of war? If politics is war, what kind is it? The GOP is not talking about Clausewitz’s normal war, one of limited time and proximate successes, but about complete subjugation.

The agenda of completely (and permanently) subjugating their internal and external opponents is fairly open, as Katherine Stewart has shown in regard to conservative white evangelicals (The Power Worshippers). Dinesh D’Souza, in his ironically-titled The Big Lie, is clear that the goal of Republican action is making and keeping Democrats a minority power, unable to get any policies passed (see especially 236-243).

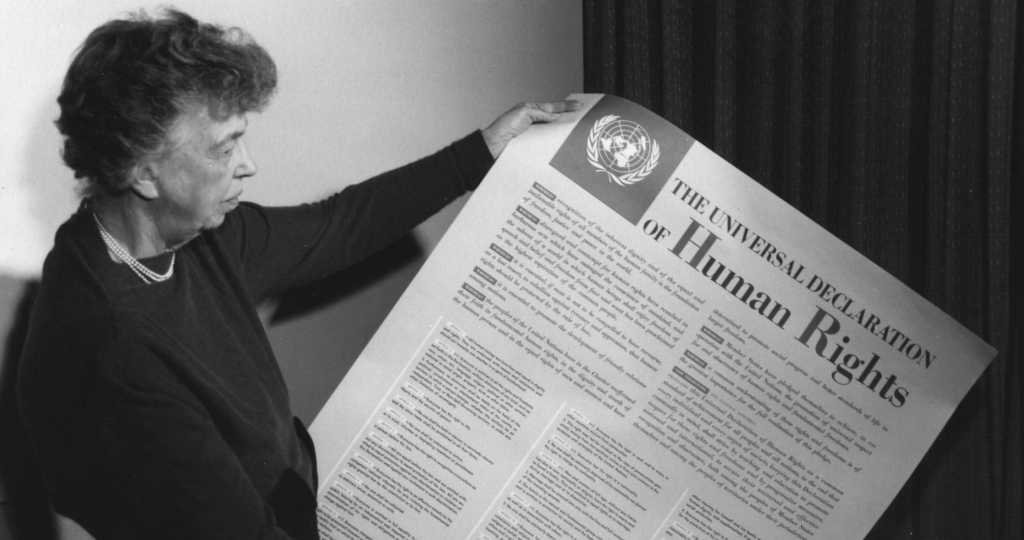

It is, in other words, a rejection of the premise of democracy.

III. Moral and rhetorical license

The conservative Matthew Continetti concludes his narrative of “the hundred year war for American conservatism” saying:

What began in the twentieth century as an elite-driven defense of the classical liberal principles enshrined in the Declaration of Independence and Constitution of the United States ended up, in the first quarter of the twenty-first century, as a furious reaction against elites of all stripes. Many on the right embraced a cult of personality and illiberal tropes. The danger was that the alienation from and antagonism toward American culture and society expressed by many on the right could turn into a general opposition to the constitutional order. (411)

(Paul Johnson makes a similar argument in his extraordinary book.) The explicit goal of disenfranchising any political opposition, the valorizing of the attempted insurrection, new processes for confirming SCOTUS nominees, voter suppression—these are a general opposition to the constitutional order. It is clear that many GOP-dominated state legislatures intend to overturn—violently if necessary—any election Democrats win. Georgia’s recent legislation, for instance, “gives Georgia’s Republican-controlled General Assembly effective control over the State Board of Elections and empowers the state board to take over local county boards — functionally allowing Republicans to handpick the people in charge of disqualifying ballots in Democratic-leaning places like Atlanta” (Beauchamp).

GOP pundits and politicians can be open in their attacks on other Americans, American culture, and American society by using strategic nostalgia to renarrate what is American, and thereby gain moral and political license. That is, radicalize their base.

By “radicalize,” I mean the process described by scholars of radicalization like Willem Koomen, Arie Kruglanski, or Marc Sageman, one that enables people to believe they are justified in escalating their behavior to degrees of extremism and coercion that they would condemn in an outgroup, and that they would at some point in the past have seen as too much.

Koomen et al. say that “perceived threat is possibly the most significant precondition for polarization [and] radicalization” (161). That a group is threatened means that cultural or even legal norms in favor of fairness and against coercion no longer apply to the ingroup. There are three elements that can serve “both to arouse a (misplaced) sense of ingroup superiority and to legitimize violence”:

“The first is the insistence that the[ir] faith represents the sole absolute truth, the second is the tenet that its believers have been ‘chosen’ by a supreme being and the third is the conviction that divinely inspired religious law outranks secular law” (Koomen et al. 160).

Since they (or we) are a group entitled by a supreme being to dominate, then any system or set of norms that denies us domination is not legitimate, and can be overthrown by violence, intimidation, or behaviors that we would condemn as immoral if done by any other group. We have moral license.

One particularly important threat is humiliation, including humiliation by proxy. That’s how the anti-CRT and anti-woke rhetoric functions. If you pay any attention to reactionary pundits and media, you know that they spend a tremendous amount of time talking about how the “woke mob” wants white people to feel shame; they frame discussions about racism (especially systemic racism) as deliberate attempts to humiliate white Christians. This strategy is, I’m arguing, a deliberate attempt to foment moral outrage, which Marc Sageman (a scholar of religious terrorism) says is the first step in radicalizing. He lists three other steps: persuading the base that there is already a war on their religion, ensuring a resonance between events in one’s personal life and that larger apocalyptic narrative, and boosting that sense of threat through interpersonal and online networks.

The rhetoric of war, at some point, stops being rhetoric.

And that’s what we’re seeing. 70% of American adults identify as Christian (Pew); it’s virtually impossible for an atheist to get elected to major office; Christian holidays are national holidays. There’s no war on Christians in the US. And the Puritans, the people Christians like to claim as the first founders of the US, prohibited the celebration of Christmas. But the pro-GOP media claims not only that there is a war on Christians, but also that its base can see signs of this war in their personal lives, as when a clerk says “Happy Holidays” instead of “Merry Christmas.” And pro-GOP media continually boosts that sense of threat through networks that prohibit serious discussion of policy, different points of view, or lateral reading.

What all this does is make “conservatives” feel that war-like aggression against “liberals” is justified because it is self-defense.

According to this narrative, the GOP has been unwillingly forced into an absolute war of self-defense. This posture of being forced into an existential war with a demonic foe gives the reactionary right complete moral license. To the extent that they can get their base to believe that they are facing extermination of themselves or “liberals,” there are no legal or moral constraints on them.

And that’s what the myths do. The myths take very particular and often new categories, practices, beliefs, and policies, and project them back through time to origin narratives, so that pundits and politicians can make their base feel existentially threatened every time someone says, “Happy Holidays.”

Beauchamp, Zach. “Yes, the Georgia election law is that bad.” Vox Apr 6, 2021, 1:30pm EDT (Accessed May 17, 2022). https://www.vox.com/policy-and-politics/22368044/georgia-sb202-voter-suppression-democracy-big-lie

von Clausewitz, Carl et al. On War. Eds. and trans. Michael Eliot Howard and Peter Paret. Princeton, NJ: Princeton University Press, 2008. Print.

Continetti, Matthew. The Right: The Hundred Year War for American Conservatism. New York: Basic Books, 2022. Print.

D’Souza, Dinesh. The Big Lie: Exposing the Nazi Roots of the American Left. Washington, DC: Regnery Publishing, a division of Salem Media Group, 2017. Print.

FiveThirtyEight. “Advantage, GOP.” https://fivethirtyeight.com/features/advantage-gop/ Accessed May 24, 2022.

Howard, Michael. Clausewitz: A Very Short Introduction. Oxford: Oxford University Press, 2002. Print.

Johnson, Paul Elliott. I the People: The Rhetoric of Conservative Populism in the United States. 1st ed. University of Alabama Press, 2022. Print.

Jones, Robert P. White Too Long: The Legacy of White Supremacy in American Christianity. New York, NY: Simon & Schuster, 2020. Print.

Koomen, Wim, and Joop van der Pligt. The Psychology of Radicalization and Terrorism. London: Routledge, 2016. Print.

Kruglanski, Arie W., Jocelyn J. Bélanger, and Rohan Gunaratna. The Three Pillars of Radicalization: Needs, Narratives, and Networks. New York, NY: Oxford University Press, 2019. Print.

“Lessons from Before Roe: Will Past be Prologue?” The Guttmacher Policy Review, 6:1, March 1, 2003. (Accessed May 16, 2022). https://www.guttmacher.org/gpr/2003/03/lessons-roe-will-past-be-prologue

Levy, Jack S. “Preventive War and Democratic Politics.” International Studies Quarterly 52.1 (2008): 1–24. Web.

Limbaugh, Rush. “Biden Will Renew Obama’s War on Suburban Property Values.” October 26, 2020. (Accessed May 16, 2022). https://www.rushlimbaugh.com/daily/2020/10/26/biden-will-renew-obamas-war-on-suburban-property-values/

“Rush to the Democrats: Stop the War on Police.” May 4, 2021. (Accessed May 16, 2022). https://www.rushlimbaugh.com/daily/2021/05/04/rush-to-the-democrats-stop-the-war-on-police/

“Rush Sounds the Alarm on the Democrat War on Policing.” April 26, 2021. (Accessed May 16, 2022) https://www.rushlimbaugh.com/daily/2021/04/26/rush-sounds-the-alarm-on-the-democrat-war-on-policing/

“The World War II Challenge We Face.” June 6, 2019. (Accessed May 16, 2022). https://www.rushlimbaugh.com/daily/2019/06/06/our-world-war-ii-challenge/

“There is No Whistleblower, Just a Leaker! We’re in the Midst of a Cold Civil War.” September 27, 2019. (Accessed May 17, 2022). https://www.rushlimbaugh.com/daily/2019/09/27/were-in-the-midst-of-a-cold-civil-war/

“Trump’s Running to Save Us from a Race War Fomented by Democrats.” August 31, 2020. (Accessed May 17, 2022). https://www.rushlimbaugh.com/daily/2020/08/31/trumps-running-to-save-us-from-the-race-war-that-democrats-are-fomenting/

“War on Women! Dems Sponsoring Sex-Trafficking at the Border.” May 26, 2021. (Accessed May 17, 2022) https://www.rushlimbaugh.com/daily/2021/05/26/war-on-women-dems-sponsoring-sex-trafficking-at-the-border/

“What Happened Since I Was Last Here: The Left Sparks a Civil War.” (Accessed May 17, 2022). https://www.rushlimbaugh.com/daily/2018/06/25/what-happened-since-i-was-last-here-left-sparks-civil-war/

Oddo, John. Intertextuality and the 24-Hour News Cycle: A Day in the Rhetorical Life of Colin Powell’s U.N. Address. East Lansing, Michigan: Michigan State University Press, 2014. Print.

Pew Research Center. “Religious Landscape Study.” https://www.pewresearch.org/religion/religious-landscape-study/ Accessed May 24, 2022.

Stewart, Katherine. The Power Worshippers: Inside the Dangerous Rise of Religious Nationalism. New York: Bloomsbury Publishing, 2020. Print.